|

'''

Train a bidirectional LSTM on the IMDB sentiment classification task.

Output after 4 epochs on CPU: ~0.8146. Time per epoch on CPU (Core i7): ~150s.

'''

import os

import numpy as np

import tensorflow as tf

import ssl

from tensorflow.keras.preprocessing import sequence

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, Embedding

from tensorflow.keras.layers import LSTM, Bidirectional

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.datasets import imdb

from tensorflow.keras.utils import plot_model

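# Disable HTTPS certificate verification (e.g. so a dataset download does not fail on certificate errors).
ssl._create_default_https_context = ssl._create_unverified_context

# Hide TensorFlow INFO and WARNING log messages.
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

# Tolerate duplicate OpenMP runtime libraries being loaded.
os.environ['KMP_DUPLICATE_LIB_OK'] = "TRUE"

# Print NumPy arrays in full, without truncation.
np.set_printoptions(threshold=np.inf)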

# Disabled branch: per-GPU memory growth via the TF2 config API.
if 1 == 0:
    gpus = tf.config.experimental.list_physical_devices(device_type='GPU')
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
        if 1 == 0:
            tf.config.experimental.set_virtual_device_configuration(gpu, [
                tf.config.experimental.VirtualDeviceConfiguration(memory_limit=800)])

# Active branch: let the TF1-compat session allocate GPU memory on demand.
if 1 == 1:
    config = tf.compat.v1.ConfigProto()
    config.gpu_options.allow_growth = True
    session = tf.compat.v1.InteractiveSession(config=config)

import matplotlib.pyplot as plt

max_features = 20000

maxlen = 80

batch_size = 32

print("maxlen %d batch size %d" % (maxlen, batch_size))

print('Loading data...')

(x_train, y_train), (x_test, y_test) = imdb.load_data('./imdb.npz',num_words=max_features)

print(len(x_train), 'train sequences')

print(len(x_test), 'test sequences')

print('Pad sequences (samples x time)')

x_train = sequence.pad_sequences(x_train, maxlen=maxlen)

x_test = sequence.pad_sequences(x_test, maxlen=maxlen)

print('x_train shape:', x_train.shape)

print('x_test shape:', x_test.shape)

y_train = np.array(y_train)

y_test = np.array(y_test)

model = Sequential()

model.add(Embedding(max_features, 128, input_length=maxlen))

model.add(Bidirectional(LSTM(64)))

model.add(Dropout(0.5))

model.add(Dense(1, activation='sigmoid'))

optimizer = Adam(1e-4)

model.compile(optimizer=optimizer, loss='binary_crossentropy', metrics=['accuracy'])

model.summary()

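os.makedirs("Figs", exist_ok=True)  # the output directory must exist before plot_model writes to it

plot_model(model, to_file='./Figs/test2.png', show_shapes=True)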

print('Train...')

os.makedirs("logs", exist_ok=True)

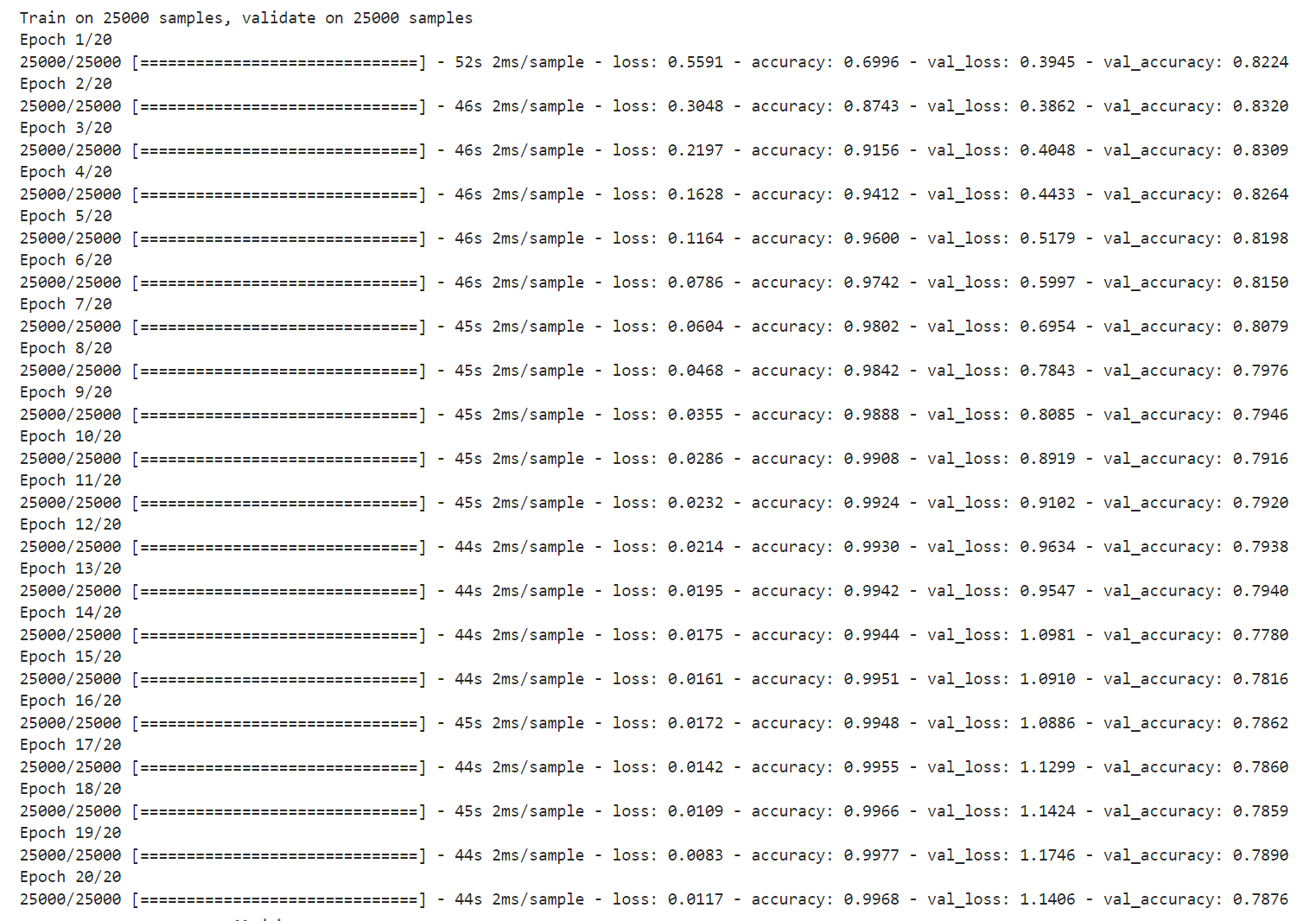

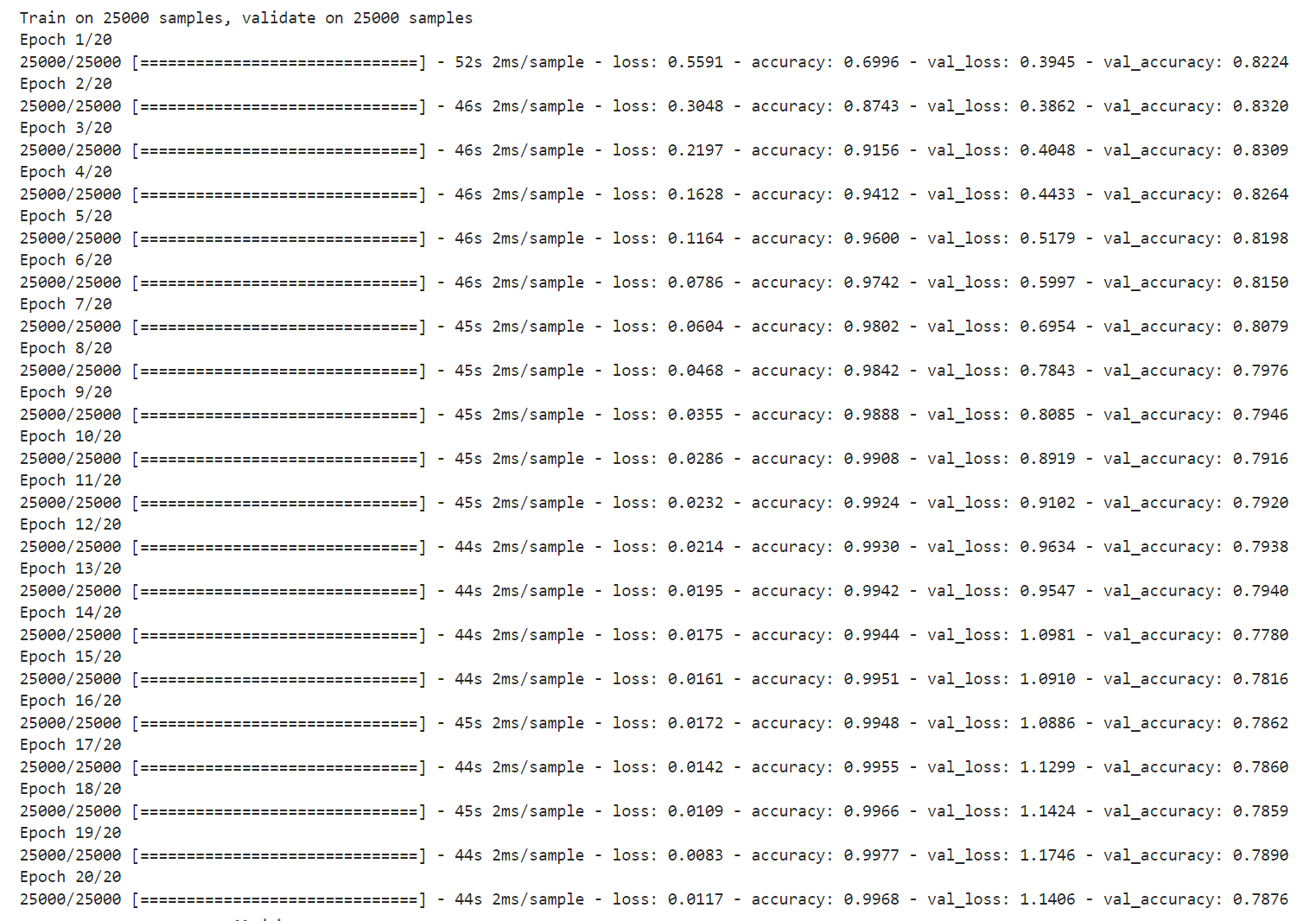

history = model.fit(x_train, y_train,
                    batch_size=batch_size,
                    epochs=20,
                    validation_data=(x_test, y_test))

os.makedirs("Figs", exist_ok=True)

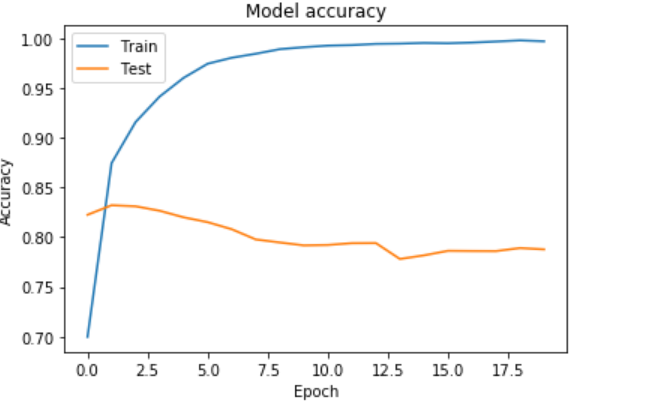

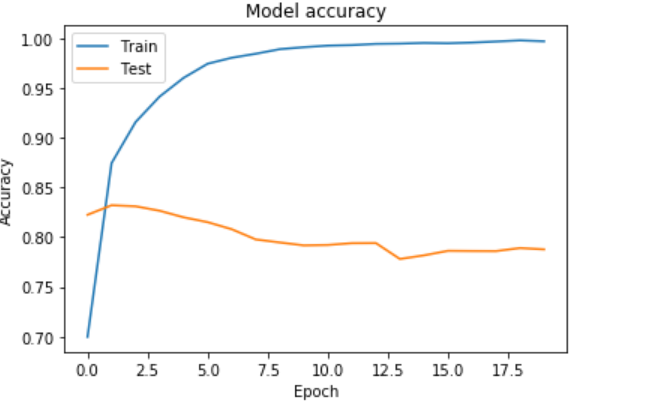

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.title('Model accuracy')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['Train', 'Test'], loc='upper left')

plt.savefig('./Figs/test2_accuracy.png')

plt.show()

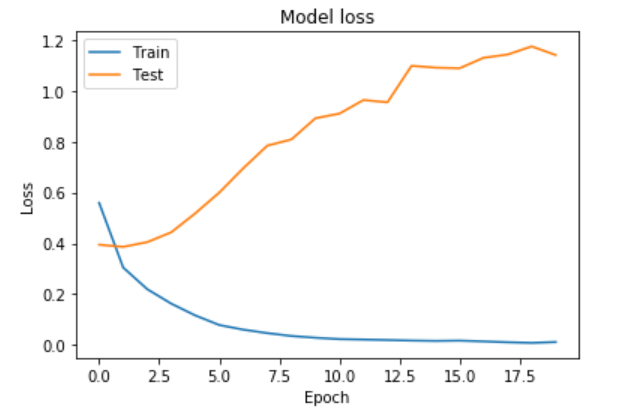

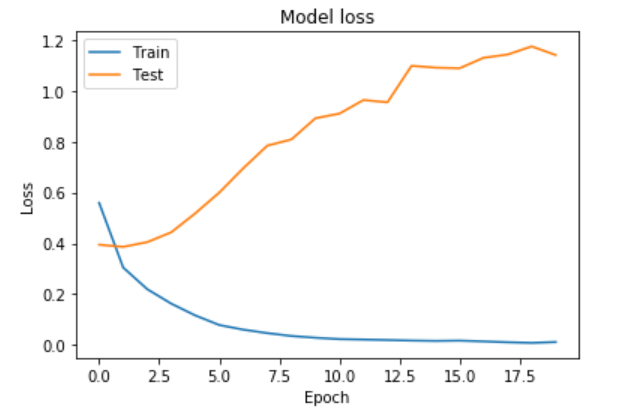

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Model loss')

plt.ylabel('Loss')

plt.xlabel('Epoch')

plt.legend(['Train', 'Test'], loc='upper left')

plt.savefig('./Figs/test2_loss.png')

plt.show()

|
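
The script uses `sequence.pad_sequences(..., maxlen=maxlen)` to bring every review to a fixed length of 80 tokens. With its default settings, that call pads and truncates at the front of each sequence, so a long review keeps only its last `maxlen` tokens and a short one is left-padded with zeros. The following is a minimal plain-Python sketch of that behavior. `pad_pre` is a hypothetical helper written for illustration and is not part of Keras.

```python
def pad_pre(seqs, maxlen, value=0):
    """Mimic the default 'pre' padding and truncation of pad_sequences.

    Hypothetical helper for illustration only; works on plain lists.
    """
    out = []
    for s in seqs:
        s = list(s)
        # Truncate from the front: keep only the last `maxlen` tokens.
        s = s[max(len(s) - maxlen, 0):]
        # Pad at the front with `value` until the length is `maxlen`.
        out.append([value] * (maxlen - len(s)) + s)
    return out


padded = pad_pre([[1, 2, 3], [4, 5, 6, 7, 8, 9]], maxlen=4)
print(padded)  # [[0, 1, 2, 3], [6, 7, 8, 9]]
```

Because truncation drops the beginning of a review, the model in this script only ever sees the final 80 tokens of longer reviews. If the opening matters more for a given dataset, `pad_sequences` accepts `truncating='post'` and `padding='post'` to change either side.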